RDR @ RightsCon 2018

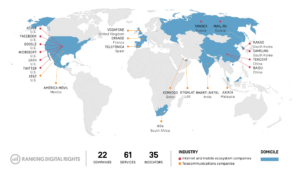

This week, members of the Ranking Digital Rights team will be in Toronto, Canada, for the sixth annual RightsCon conference, organized by Access Now. We are organizing and participating in several RightsCon sessions and look forward to discussions with human rights and technology advocates.