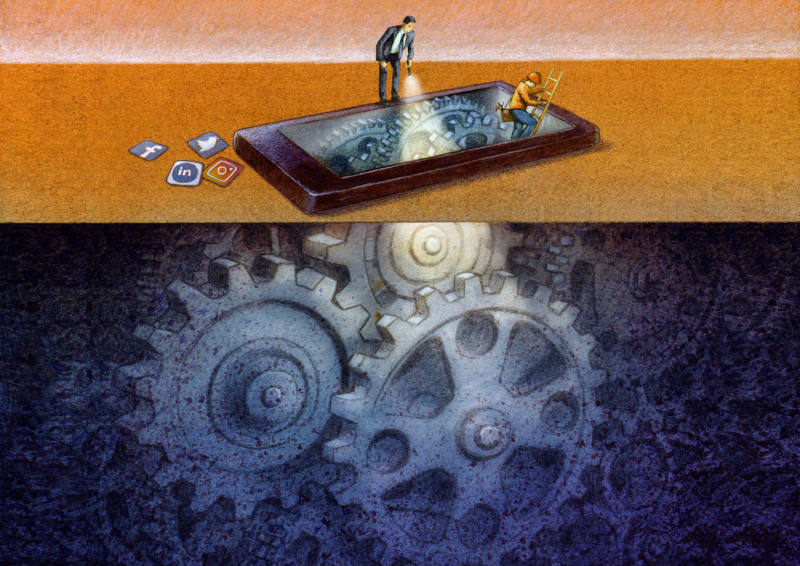

Original art by Paweł Kuczyński

As the 2020 U.S. presidential campaign continues amid a pandemic with no precedent in living memory, politicians on both sides of the aisle are understandably eager to hold major internet companies accountable for the spread of disinformation, hate speech, and other problematic content. Unfortunately, their proposals focus on pressuring companies to purge their platforms of various kinds of objectionable content, including by amending or even revoking Section 230 of the 1996 Communications Decency Act. None of these proposals addresses the underlying cause of the dysfunction: the surveillance capitalism business model.

Today we’re publishing a new report, “It’s Not Just the Content, It’s the Business Model: Democracy’s Online Speech Challenge,” that explains the connection between surveillance-based business models and the health of democracy. Written by RDR Senior Policy Analyst Nathalie Maréchal and journalist and digital rights advocate Ellery Roberts Biddle, the report argues that forcing companies to take down more content, more quickly is ineffective and would be disastrous for free speech. Instead, we should focus on the algorithms that shape users’ experiences.

In the report, we explain how algorithms determine the spread and placement of both user-generated content and paid advertising. The systems that shape content and those that target ads are distinct, but they share the same logic: showing each user the content they are most likely to engage with, according to the algorithm’s calculations. Another type of algorithm performs content moderation: identifying and removing content that breaks the company’s rules. But automated moderation is no silver bullet, because these tools cannot understand context, intent, and other factors that are key to deciding whether a post or advertisement should be taken down.

We outline why today’s technology is not capable of eliminating extremism and falsehood from the internet without stifling free expression to an unacceptable degree. While we accept that there will never be a perfect solution to these challenges, especially not at the scale at which the major tech platforms operate, we assert that companies could significantly reduce the prevalence of disinformation and hateful content by changing the systems that decide so much of what actually happens to our speech (paid and unpaid alike) once we post it online.

At the moment, determining exactly how to change these systems requires insight that only the platforms possess. Very little is publicly known about how these algorithmic systems work, despite their enormous influence on our society. If companies won’t disclose this information voluntarily, Congress must intervene and insist on greater transparency, as a first step toward accountability. Once regulators and the American public have a better understanding of what happens under the hood, we can have an informed debate about whether to regulate the algorithms themselves, and if so, how.

This report is the first in a two-part series. It draws on more than five years of research for the RDR Corporate Accountability Index, as well as on findings from a just-released RDR pilot study testing draft indicators on targeted advertising and algorithmic systems.

The second installment, to be published later this spring [now available here], will examine two other regulatory interventions that would help restructure our digital public sphere so that it bolsters democracy rather than undermining it. First, national privacy legislation would blunt the power of content-shaping and ad-targeting algorithms by limiting how personal information can be used. Second, requiring companies to conduct human rights impact assessments about all aspects of their products and services, and to be transparent about those assessments, will help ensure that they consider the public interest, not just their bottom line.

We had to cancel our planned launch event due to the novel coronavirus, but we’ll be organizing webinars to discuss why we think we should pay attention to the business model, not just the content (#itsthebusinessmodel).

Please read the report, join the conversation on Twitter using #itsthebusinessmodel, and email us at itsthebusinessmodel@rankingdigitalrights.org with your feedback or to request a webinar for your organization.

We would like to thank Craig Newmark Philanthropies for making this report possible.