Context before code: Protecting human rights in a state of emergency

By Jan Rydzak & Elizabeth M. Renieris

The COVID-19 pandemic has brought about a multitude of crises that stretch far beyond the realm of public health. In conflict areas like Yemen, the disease compounded already dire circumstances for civilians seeking protection from ongoing violence. For students around the world, it laid bare the meaning of the digital divide: those with reliable internet access have been able to keep up with schooling, and those without have fallen behind. In the tech sector, while profits have soared, the spread of algorithmically driven disinformation about the virus has brought fatal consequences to people around the world.

While the digital platforms we rank were all prepared to seize the moment and profit from the circumstances created by the pandemic, all the companies were caught off guard by the impact of COVID-19 on their own users. Yet they have all weathered crises before. Telcos have raced to repair infrastructure in the wake of natural disasters. Platforms have grappled with government censorship orders in the face of political upheaval.

The way a company responds to a crisis does not just affect its bottom line. It can have profound implications for the fundamental rights of millions, if not billions, of people, whether or not they are “users” of a product or service that the company provides.

The year 2020 could not have given us a better set of case studies in just how dangerous it is for these companies to be so unprepared for the human impact of crisis.

And yet tech juggernauts are expanding their monopolies as custodians of user data and gatekeepers of access to content with no more accountability than before.

In moments of crisis, companies can enable human rights violations, or they can try to mitigate them by following cornerstone business and human rights practices, like providing remedy to the affected and finding real ways to prevent further abuse.

How can companies shape their policies and practices so that they are prepared—rather than blindsided—when the next crisis strikes? We looked at a few key examples from the past year to help answer this question.

No network, no peace

From Azerbaijan to Myanmar to Zimbabwe, network shutdowns have become a knee-jerk government response to conflict and political upheaval. In 2019 alone, governments around the world ordered approximately 213 network shutdowns, many of them designed to be indefinite.

In a network shutdown, the mass violation of freedom of expression is typically only the first in a cascade of human rights harms that follow. People are rendered unable to communicate with loved ones, obtain vital news and health information, or call for help in emergencies, putting their right to life in danger. Shutdowns can also foment violence, hide evidence of killings, or even send the disconnected directly into the line of fire.

The 12 telecommunications companies in the RDR Index operate in 125 countries. In 2020, seven of these companies were known to have executed government-ordered network shutdowns, either directly or through their subsidiaries. Two cases stand out: Telenor, a dominant provider in Myanmar, cut off internet access for more than a million people in Myanmar’s Rakhine and Chin states, and kept it off at the government’s behest. In India, millions of residents of Kashmir have lived under digital siege since mid-2019, thanks in part to a shutdown executed by Bharti Airtel and its peers at the order of the Modi government.

Marginalized people in both conflict zones have suffered doubly from COVID-19 and communication disruptions. But the companies carried these orders out in starkly different ways that had measurable impacts for customers.

In Myanmar, while Telenor complied with government orders, the company publicly opposed the blackout and published detailed information about the shutdown, identifying the order’s legal basis and responsible authorities. As the government repeatedly extended the blackout, the company continued to release updates. Telenor also injected more transparency on shutdowns into its annual report on authority requests and mitigated the risk to lives and livelihoods by reducing international call rates, enabling people to more easily make calls in the absence of VoIP apps like WhatsApp.

A Tale of Two Shutdowns

The seven RDR Index telecommunications companies known to have executed government-ordered network shutdowns in 2020, either directly or through their subsidiaries, were Bharti Airtel (India, Chad, Tanzania), Orange (Guinea, Jordan), Telenor (Myanmar), Vodafone (Turkey, India via Vodafone Idea), MTN (Sudan, Syria, Guinea, Yemen), Etisalat (Tanzania via ZanTel), and América Móvil (Belarus via A1 Belarus / A1 Telekom Austria Group).

By contrast, India’s Bharti Airtel has exercised an apparent policy of silence, reporting no information about the order, or data on shutdowns. In India, the world’s most frequent purveyor of this extreme form of digital repression, such corporate inertia can trigger hopelessness among those who are perpetually disconnected.

Telenor’s response shows how transparency can form a breakwater against network shutdowns. We urge companies that receive a shutdown order to make this information public. But they must also take a stand and create friction. Every excessive order should be met with pushback, and companies should alert users about impending blackouts instead of abruptly thrusting them into digital darkness.

Incitement gone viral

When social media posts inciting political violence go viral, the consequences can be fatal. There will always be bad actors on the internet. But research has shown that companies’ algorithmic systems can drive the reach of a message by targeting it to people who are most likely to share it, influencing the viewpoints of thousands or even millions of people.[1]

Facebook itself has published research on its operations in Germany showing that 64 percent of the time, when people join an extremist Facebook Group, they do so because the platform recommended it.

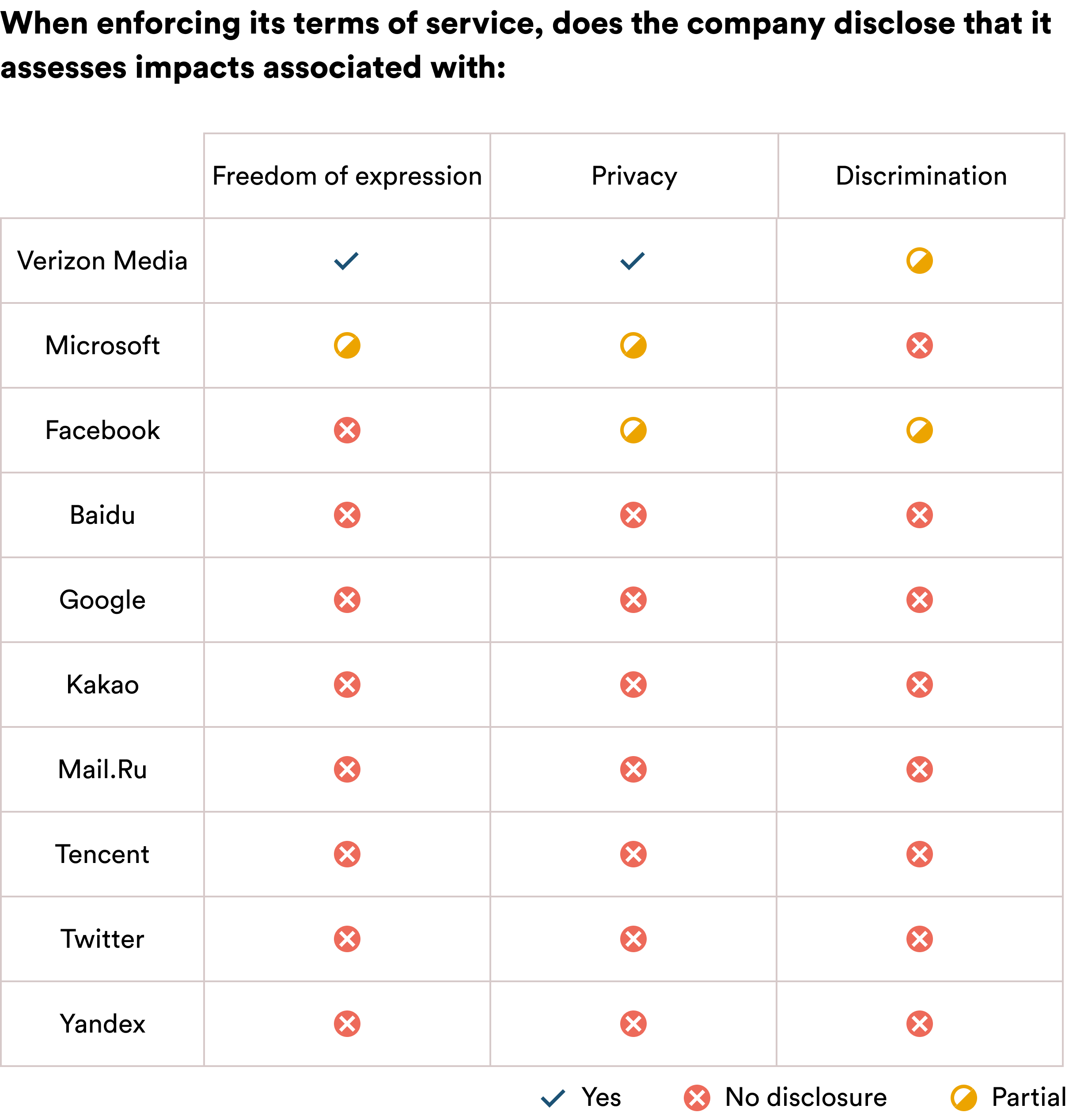

Structured human rights impact assessments are rapidly gaining acceptance as a model for evaluating the harms that a company may cause or contribute to in different contexts. Strong due diligence can help predict, for instance, the rise of fringe movements in social media communities or the likelihood of coordinated extremist violence moving from online spaces into real life. Yet only four companies we ranked appeared to conduct impact assessments of their own policy enforcement, where these kinds of threats often arise.

Companies cannot build resilience to shocks without bridging these gaps, and it is virtually certain that more governments will soon make conducting due diligence compulsory for companies. We urge companies to conduct these assessments before they launch new products or services, or enter new markets, to mitigate harms before they happen.

Companies are erratic and opaque about how they implement their human rights commitments in ordinary times, but these things can become even murkier in volatile situations, and the consequences are often most severe for marginalized communities. When a social media-driven crisis unfolds, companies often fail to respond unless they perceive a risk to their reputation. This encourages the proliferation of “scandal-driven” human rights due diligence that, at most, helps to survey or contain the damage rather than prevent it.[2]

In Sri Lanka in 2018, rampant hate speech on Facebook helped instigate a wave of violent attacks, mostly targeting Muslims, who represent a minority in the predominantly Buddhist country. Following bitter criticism from civil society and coverage by major media including the New York Times, Facebook commissioned a third-party assessment of its operations in Sri Lanka. In May 2020, the company released an abridged version of the assessment, alongside two others it had commissioned two years prior.

While this marked a step in the right direction, the public documentation showed little evidence that the assessors had investigated how Facebook’s ranking and recommendation algorithms helped incite communal violence and exacerbate other harms. In its response to two of the assessments, Facebook addressed this issue in the most skeletal way, claiming only that certain engagement-driving algorithms were “now phased out.”

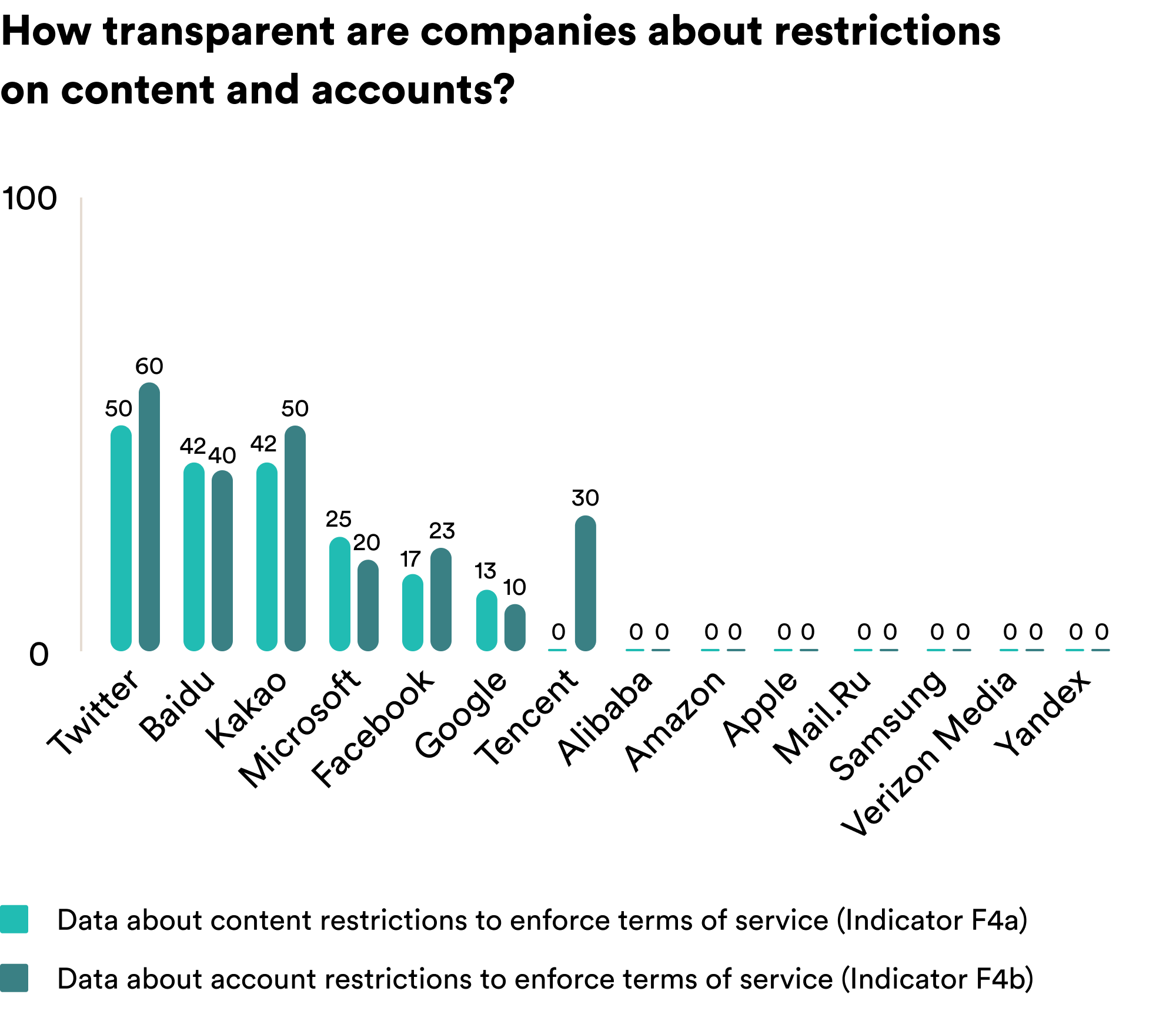

Companies also need to publicly report how they carry out these actions. Here too, the companies in the RDR Index are falling short. Facebook’s Community Standards Enforcement Report, for example, does not include the total volume of restricted content or accounts, enforcement by restriction type, or country-specific information.

Companies must include algorithmic transparency in their reporting as well. They should publish rules and policies covering their approach to algorithms and targeted advertising as the basis for their transparency reporting and corporate due diligence.

More algorithms, more appeals

When it comes to content moderation, companies will always make mistakes. Given the scale at which digital platforms operate, there is simply no way to protect everyone from the harms that can arise from online content and companies’ censorship decisions. This is why a user’s right to remedy, or to appeal a company’s content decisions, is so important.

Losing access to remedy can leave people in a sort of information and communication limbo. When accounts are wrongfully suspended, people lose what might be their only remote communication tool. On the content side, we’ve seen companies mistakenly censor everything from vital public health information, to calls for protest, to evidence of war crimes.

When the COVID-19 pandemic struck, Facebook sent home workers who review millions of posts each day for rule violations. The company decided to put its algorithms in charge instead, hoping the technology would keep content moderation processes moving. But the solution had at least one critical flaw: it was unable to address appeals. In an August 2020 press call, Facebook explained that it had severely scaled back the processing of new appeals sent by disgruntled users, indicating that the company’s experiments with algorithmic moderation had not worked out so well. The system’s apparent collapse left millions of users unable to appeal moderation decisions at all.

The story at YouTube was different. Like Facebook, Google (YouTube’s parent company) was forced to send workers home, also “relying more on technology” to carry out content moderation. Google even offered a statement saying that the change might result in the company “removing more content that may not be violative of our policies.” But Google also thought ahead and ramped up its appeals systems, anticipating that algorithmic content moderation would lead to more wrongful removals and more appeals from users. Indeed, appeals doubled in the same period. But because the company took measures to prepare, the number of videos restored on appeal quadrupled.

Appeals in Crisis: Facebook vs. YouTube

Ordinary times: Q4 2019

Between October and December 2019, YouTube users appealed the removal of 10k videos. About 20% of these videos were restored on appeal.

In the same period, Facebook reported that it had received appeals on nearly six million pieces of content or accounts that the company had restricted. About 16% were restored on appeal.

Ordinary times: Q1 2020

The two platforms maintained similar dynamics in the next quarter. YouTube reversed 25% of the restrictions that users appealed, and Facebook reversed 20%. But then the unexpected happened.

Facebook in COVID times

In both the second (April–June) and the third (July–September) quarters of 2020, Facebook users were able to file only approximately 200,000 appeals, 3 percent of the number submitted in the first three months of 2020.

YouTube in COVID times

Appeals doubled in the same period. But because Google had anticipated more wrongful removals and ramped up its appeals systems, the number of videos restored on appeal quadrupled in relation to the first three months of 2020.

How can companies prepare for crisis?

No person or company can anticipate every harm that will arise in an emergency. But there is a significant distance between this zone of impossibility and the lack of preparedness that many companies exhibited in 2020. Our indicators, which draw extensively from international human rights doctrine and the expertise of human rights advocates around the world, offer a path forward.

Whether circumstances are ordinary or extraordinary, companies should pursue three objectives (among many others) if they seek to improve.

1. Publicly commit to human rights

Companies must make a public commitment to respect human rights standards across their operations.[3] Of course, a policy alone will not improve performance. As our 2020 data showed, many companies in the RDR Index committed to human rights in principle, but failed to demonstrate these commitments through the practices that actually affect users’ rights. These kinds of commitments can serve as a tool for a company to measure and document violations, and for advocates to push for better policies at the product and service level.

Take Apple, which was one of the most improved companies in the 2020 Index, largely due to a new human rights policy that the company published after years of sustained pressure by RDR and others. When Apple delayed the rollout of pro-privacy, anti-tracking features in iOS 14, advocates (including RDR) were able to use the new policy to hold the company to account.

2. Double down on due diligence

Large multinational companies like the ones in our index can no longer plead ignorance. They must reinforce their policy commitments by putting human rights at the center of their practices, and carrying out robust human rights due diligence.

Companies that conduct human rights impact assessments should see early warning signs when political or social conflict is on the horizon, and they can act on this by enhancing scrutiny of online activity, stanching the spread of inciting or hateful content, or even notifying authorities, where appropriate. When chaos erupts, a company with strong due diligence processes in place can quickly identify how its platform may be used to cause harm and activate protocols to protect potential victims.

3. Pull back the curtain and report data

Companies can and do report some data on their enforcement of their own policies, along with compliance with government censorship and surveillance demands, in the form of transparency reports.

While 16 companies in the RDR Index offer some kind of transparency report (and two others have begun doing so since our research cutoff date), none of these companies does nearly enough to show users, civil society, or policymakers what’s really happening behind the curtain.

Only five companies in the 2020 RDR Index published data about enforcement of their own policies and only six revealed data on government censorship demands. Even existing transparency reports suffer from major blind spots. Facebook’s Community Standards Enforcement Report, for example, does not include the total volume of restricted content or accounts, enforcement by restriction type, or country-specific information.

Together, such gaps can deprive users and researchers of the ability to map enforcement surges to specific events, especially as companies expand their arsenal of enforcement actions. Greater clarity here would brighten what remains a grim landscape.

Better late than never

Without preparedness, crises spin out of control. Understaffed hospitals and truncated national pandemic response teams fail to contain outbreaks of disease, security forces fail to protect core institutions overwhelmed by frenzied mobs, and social media companies fail to quash online threats of violence before they lead to real-life harm.

Companies can address these harms by anchoring all of their actions in international human rights standards. Companies that build respect for human rights into their policies and products from the start will always be better prepared to face uncertainty than those that do not.

As we continue to grapple with a global crisis that has kindled innumerable local fires, companies continue to make ad hoc decisions that affect millions. They are late to embrace these standards. But late is better than never.

Footnotes

[1] Siva Vaidhyanathan, Antisocial Media: How Facebook Disconnects Us and Undermines Democracy (New York: Oxford University Press, 2018).

[2] See Kendyl Salcito, “Company-commissioned HRIA: Concepts, Practice, Limitations and Opportunities,” in Handbook on Human Rights Impact Assessment, ed. Nora Götzmann (Northampton, MA: Edward Elgar Publishing, 2019), 32–48.

[3] See Lucy Amis and Ashleigh Owens, A Guide for Business: How to Develop a Human Rights Policy, 2nd ed. (New York: UN Global Compact and Office of the United Nations High Commissioner for Human Rights, 2015), available at FHR_Policy_Guide_2nd_Edition.pdf
