Key Findings

User data fuels Big Tech's algorithms, and there's no opting out


By Samantha Ndiwalana

Although AI has never commanded as much attention as it has in the last couple of years, neither AI nor its algorithms are new to the Big Tech companies assessed by RDR. In fact, AI has been integrated into the design of many major tech company features for more than a decade. Predictive text, for example, is a type of AI that has long been built into messaging services like WhatsApp and email services such as Gmail and Outlook.com.

Algorithms are the engines that drive AI systems. They support decision-making systems that influence content visibility on social media platforms, and they power the recommendation engines behind digital assistants' suggestions, such as which movie or TV show to watch based on personal preferences. Algorithms are also used to optimize physical environments, for instance by determining the most efficient layout of supermarket aisles. These functions are made possible by the data Big Tech companies collect from users of their digital services. While technology companies claim that data collection and algorithmic processing enhance the user experience, this narrative discounts the significant human rights implications of this technology, particularly for freedom of expression and privacy.

The risks associated with algorithms fall broadly into four areas: eroding privacy, undermining autonomy, deepening inequality, and impairing safety. Despite these concerns, digital companies are rushing to integrate AI further into their businesses without fundamental consideration of algorithmic safety and responsible practices. For example, in 2024, Meta faced global backlash over changes to its privacy policy that allowed the company to use years of user data to train its AI models without users’ consent, a clear example of “eroding privacy.” In February 2025, Alphabet updated its AI Principles, backtracking on previous commitments not to develop AI for weapons and surveillance, a concerning example of “impairing safety.”

It’s the business model: Algorithms that are harmful by design

Algorithms have always been a critical component of Big Tech’s business model, even before the explosion of AI. Once again, this extractive business model relies on collecting an inordinate amount of personal data to power its algorithms. The algorithms companies deploy are built to keep people scrolling on social media sites and using assistant services for longer periods of time, creating a cycle in which ever more user data is generated and turned into profit. That data is then used to sell advertising to companies seeking to target specific users. For companies like Alphabet, advertising is the most lucrative revenue stream. As the hype surrounding AI intensifies, algorithms’ potential to generate further profits for Big Tech has only grown. The result is an environment in which companies are rushing to build AI-powered algorithms into every product they can, often at the expense of users’ safety.

In 2020, RDR introduced new disclosure standards for the development and deployment of targeted advertising and algorithmic systems. These standards have allowed our research to shine a brighter light on company practices related to the advertising and algorithms that fuel Big Tech’s extractive business model. When these indicators and elements were first integrated into the 2020 RDR assessment, most companies’ overall scores decreased as the majority shared close to nothing about how they used algorithms, and even fewer disclosed how they developed and trained their systems.

Of all the services assessed by RDR, Big Tech’s social media and virtual assistant services make the most widespread use of algorithms and targeted advertising as part of their business model. We delve more into each below.

Virtual assistant services

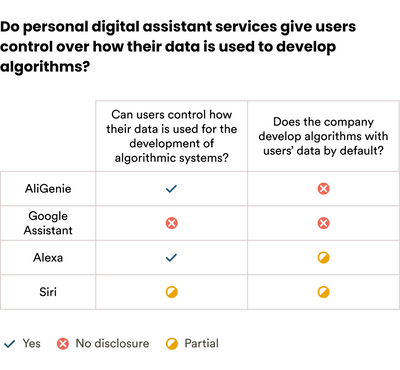

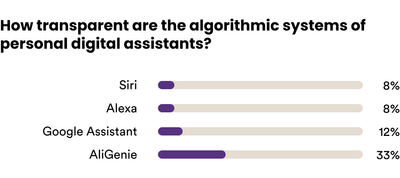

The widespread use of virtual assistants in homes, offices, and on mobile devices increases the risk of harms such as privacy erosion. For example, in January 2025, Apple agreed to pay USD 95 million to settle a class action lawsuit alleging that the company’s Siri assistant recorded private conversations and shared the data with third parties. Notably, two of the four virtual assistants assessed by RDR incorporate users’ data into the development of their algorithms by default, and AliGenie does not even give users the option to opt out. Meanwhile, Google Assistant is built into more than 1 billion devices, yet the service provides no disclosure on how users can control the use of their data for algorithmic development. In 2022, China introduced an algorithm filing regulation requiring providers of algorithmic recommendation systems with strong potential to influence public opinion to submit basic information about their algorithmic models to the government. In response, Alibaba shared with its users the core principles behind the major algorithms used on its key platforms, including AliGenie.

Among the four digital assistants evaluated, Alibaba’s AliGenie scored highest on algorithmic transparency, while Amazon’s Alexa tied with Apple’s Siri at the bottom of the pile. However, Amazon and Apple were the only companies that allowed users to control how their data is used to train the algorithms behind their digital assistants. While Alibaba, Amazon, Apple, and Alphabet all had ethical AI principles in place in 2024, the scope of these principles and the companies’ algorithmic transparency varied. For example, only Alibaba and Alphabet included policy commitments to human rights in the development of their algorithms, and even these commitments were limited in scope and often unclear. Furthermore, despite its AI principles, Amazon was fined by the U.S. Federal Trade Commission in 2023 for retaining children’s voice recordings from Alexa devices even after parents requested that the data be deleted.

Social media services

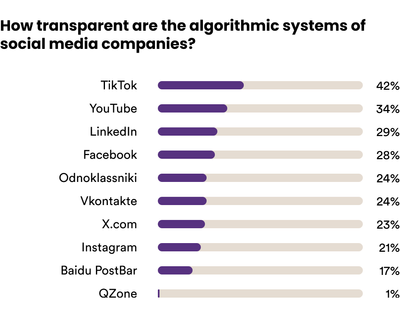

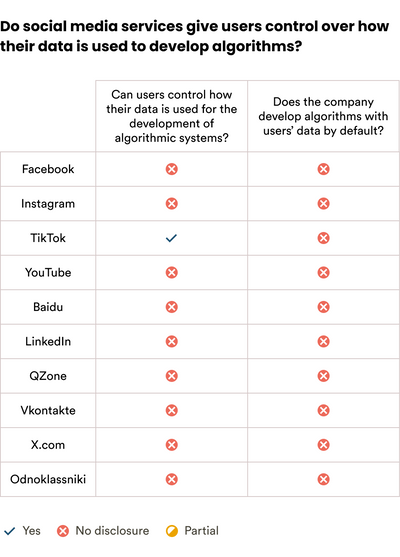

Social media[1] is, of course, another omnipresent service provided by many of the tech giants we assess. These platforms shape how people connect and work and how (dis)information spreads, and they increasingly affect our democracies. Social networking, photo-sharing, and video-sharing services have a wide global reach. In January 2025, approximately 2.28 billion people worldwide used Facebook. As of February 2025, advertisements on Facebook reached approximately 41 percent of the world’s internet users. The EU Digital Services Act (DSA) obligates providers of very large online platforms, such as YouTube, Facebook, LinkedIn, and TikTok, to assess potential systemic risks that may arise from the design, functioning, and use of their services, including how their algorithms might contribute to those risks. Of the ten social media services assessed by RDR, TikTok had the most transparent disclosure on algorithmic development, clearly describing how its recommendation system works. It was also the only social media service that clearly disclosed that it incorporates user data into the development of its algorithms by default.

Most companies did not disclose whether they provide users with options to control how their information is used to develop algorithms. Without transparency on how companies are designing and deploying their algorithms, users remain vulnerable and at risk of harm. Their personal information can be used to insidiously influence their behavior, whether over something as minor as a small online purchase or something as consequential as who they vote for. Overall, the poor performance of these services signals a lack of accountability and poses a clear danger to the users who have put their digital lives in the hands of these companies.

But are companies doing any better on these indicators than when we first assessed them? Since 2020, Meta has partially improved its transparency about how it assesses the freedom of expression risks associated with developing and using its algorithmic systems. Likewise, Alphabet shared for the first time, as part of its 2022 AI Principles, that it would incorporate its privacy principles into the development and use of its AI. On assessing discrimination risks in algorithmic systems, Microsoft’s transparency declined between 2022 and 2025, with no disclosure found on the topic, while Alphabet’s and Tencent’s transparency improved only slightly. In 2025, as in 2022 and 2020, fewer than half of the companies were found to conduct human rights impact assessments on their algorithmic systems. Furthermore, in 2025, only Samsung disclosed an explicit, clearly articulated human rights policy commitment covering its development and use of algorithmic systems.

Big Tech companies still have a long way to go before they can show that they take their users’ algorithmic safety seriously. To get there, they will need to prioritize transparency, ethics, and safety throughout the algorithm development process. Legislation like the EU AI Act is encouraging, as it gives governments an important tool for holding companies accountable. Companies that are serious about protecting users’ rights should not wait for legislation before they act. Yet without regulation or stakeholder action, Big Tech companies will continue to use algorithms to pursue their extractive business model. A combination of regulation and stakeholder action, such as shareholders voting in the interest of user safety, can compel companies to look beyond simply being the fastest in the AI race, driven by a “move fast and break things” mentality, and push them instead toward a race in which safety and innovation go hand in hand.

Footnotes

[1] Social media services assessed by Ranking Digital Rights include social networking and blogging services (Facebook, LinkedIn, X.com, Baidu PostBar, VKontakte, QZone, and Odnoklassniki) as well as video- and photo-sharing services (YouTube, TikTok, and Instagram).