This category examines the extent to which companies disclose concrete commitments and efforts to respect users’ freedom of expression. Companies that perform well here demonstrate a strong public commitment to transparency, not only in terms of how they comply with laws and regulations or respond to government demands, but also how they determine, communicate, and enforce private rules and commercial practices that affect users’ freedom of expression.

In this category, Internet companies generally received higher scores than telecommunications companies. This is due in part to the different nature of the services: telecommunications services are a conduit for speech and content, while Internet companies (among other functions) serve as a platform through which speech is shared publicly and privately. Internet companies everywhere impose restrictions on the activities and expression of users, either at the demand of private parties or through enforcement of their own terms of service. What is not universal is the extent to which they are transparent about these practices, and the extent to which these practices adhere to international human rights standards.

For telecommunications companies, the primary means of restricting user expression and access to information are the blocking or filtering of websites and network shutdowns in particular geographic areas. While such practices are common in some jurisdictions, they are much less so in others. Nonetheless, in every jurisdiction there is a risk that telecommunications companies will infringe upon users’ freedom of expression. Therefore, we take the position that assessing all telecommunications companies on freedom of expression criteria is appropriate and indeed necessary.

Among telecommunications companies, Vodafone was the relative leader on disclosed policies and practices that affect users’ freedom of expression, although it received credit for less than 50 percent of the total possible score. It was followed by fellow Industry Dialogue member AT&T. Orange, the third Industry Dialogue member in the Index, followed distantly at 29 percent. The remaining companies scored between 16 and 27 percent of total possible points.

Network management: Indicator F10, which examined network management practices, applied only to telecommunications companies. It asks if a company discloses whether it prioritizes or degrades transmission or delivery of different types of content, and if so for what purpose. Effectively, it seeks disclosure on whether the company does or does not adhere to principles of net neutrality, and if not, why.

Of the eight telecommunications companies evaluated, only Vodafone disclosed that it does not prioritize or degrade the delivery of content (in the United Kingdom). Companies that provided no disclosure whatsoever for their home markets were Etisalat, MTN, and Orange. The others disclosed to varying extents that they prioritize or degrade content delivery in their home markets, and they explained their purpose for doing so (e.g., throttling speeds after users consume a certain amount of data).

Among Internet companies, Google’s disclosed policies and practices that affect users’ freedom of expression earned the company 68 percent of the possible total score, about ten percentage points higher than the next companies, Kakao and Twitter, which nearly tied at 59 percent and 58 percent, respectively. Yahoo earned slightly above 50 percent of total possible points for freedom of expression. Microsoft’s lower score of 46 percent reflects the fact that, until mid-October 2015, its transparency reporting did not include information about content restriction. On October 14, 2015, too late for inclusion in the Index, Microsoft published an updated version of its transparency report, which for the first time included data on content removal requests. This disclosure will be evaluated in the next iteration of the Index.

Facebook’s score was brought down by the lack of disclosure (and poorer quality of policies) for two of its services that are used by hundreds of millions of people around the world: Instagram and WhatsApp. (See Facebook’s company report for more details.)

Disclosure of rules: Many, but not all, companies performed well on the indicator examining the availability of terms of service (F1). However, scores were much lower for indicator F2, which examines whether companies provide users with notice and a record of changes to those terms. This indicator expects companies to clearly commit to notify their users of changes to the terms of service and to maintain a log of those changes. Many companies objected to these expectations. Some argued that sending too many notifications to users and publishing archives of changes creates more confusion than clarity. Companies do not all agree, however. Kakao received perfect scores for two services, Daum Search and Daum Mail, on this indicator.

On two indicators in this category, all companies received at least some points, and companies that otherwise received low overall scores performed relatively well. Indicator F3 asks, “Does the company disclose whether it prohibits certain types of content or activities?” and indicator F4 asks, “Does the company explain the circumstances under which it may restrict or deny users from accessing the service?” The point of these indicators is that companies should be clear with users about what their rules are and how they enforce those rules. Interestingly, companies headquartered in countries where Internet censorship is documented to be relatively extensive tended to score fairly well on these indicators – presumably due to their need to demonstrate compliance with legal restrictions on speech. For more information, see the company reports in Section 5 as well as the company and indicator pages on the project website.

Private enforcement: the black box

Indicator F9 asks, “Does the company regularly publish information about the volume and nature of actions taken to enforce the company’s own terms of service?” As previously mentioned in the Key Findings section, no company received any credit on this indicator.

Several companies told our researchers in private communications that publishing data about the volume and type of content removed in the course of enforcing terms of service (e.g., against hate speech, harassment, incitement to violence, or sexually explicit content) would not, in their view, help promote freedom of expression. Some argued that too much transparency about such enforcement would enable criminals and people seeking to harm other users to more effectively “game” the system, while others argued that private enforcement also includes fighting spam, about which it supposedly would not be meaningful to provide insight.

Yet at the same time, civil society groups in a range of countries have raised concerns that companies enforce their terms of service in a manner that is opaque and often viewed as unfair to certain groups. Such problems indicate that for companies to maintain or establish legitimacy as conduits for expression, they must also offer greater transparency and accountability in relation to how they police users’ content and activities. The score of zero across the board on this indicator highlights the need for dialogue among companies and other stakeholders about what reasonable steps companies can and should take to be more transparent and accountable about how they enforce their terms of service.

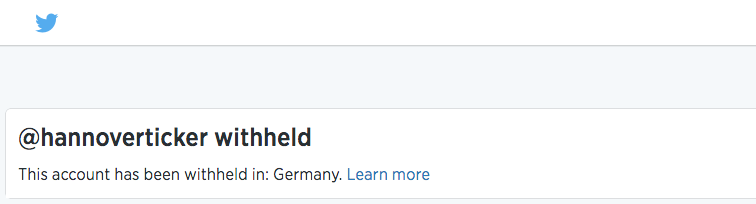

Notifying users of restrictions: Indicator F5 asks, “If the company restricts content or access, does it disclose how it notifies users?” The indicator seeks company commitments to notify users who are blocked from accessing all or part of a service, who are blocked from viewing content, or who try to view content that has been removed from the service entirely. To receive credit on this indicator, such disclosure must be accessible to people who are not signed up or subscribed to the service. Twitter, for example, explains how it notifies users when they are prevented from viewing “country withheld content.”

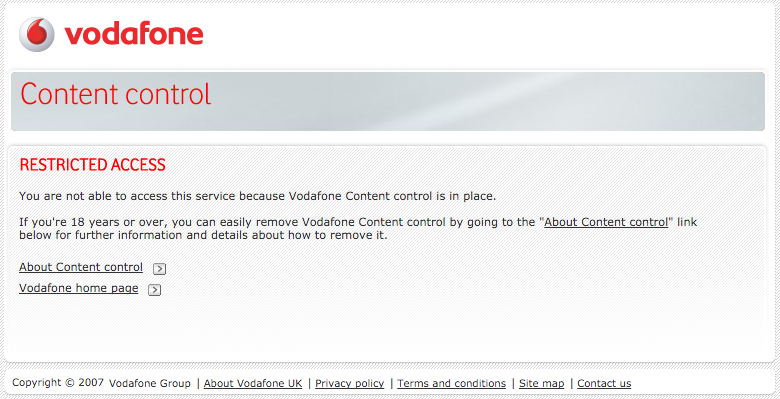

Other companies do not provide any publicly available commitments or disclose materials about how or under what circumstances they notify users, although users have reported receiving notifications from companies when trying to access blocked content.

One company, for example, suggested to our researchers that we should find a subscriber of its service and ask that person to access a particular website that the company blocks, in order to verify that it does notify users. As previously mentioned, the Index methodology does not give credit for information that can only be verified by paying subscribers. It is important that companies publicly disclose information about their policies and processes for notifying users about content and access restrictions. Such disclosure will improve accountability around how content and access restrictions are implemented. This practice not only enables stakeholders who are not subscribers to evaluate and compare different companies’ practices, it also gives consumers an opportunity to make informed decisions based on how different companies communicate with users about restrictions.

Transparency reporting: Fewer companies publish disclosures about third-party requests to restrict content – a key area examined in the Freedom of Expression category – than publish disclosures about requests to share user information (see the Privacy section). While 11 companies disclosed some information about their process for responding to government requests for user data (see the discussion of Indicator P9 in the Privacy section), only eight disclosed information about their process for responding to content restriction requests made by government or court authority (F6). Nine of the ranked companies published data about government requests for user information (see the discussion of Indicator P11 in the Privacy section), but only six (AT&T, Facebook, Google, Kakao, Twitter, and Yahoo) published data about government requests to remove user content (F7). Of those, only four (Google, Kakao, Twitter, and Yahoo) disclosed any data related to requests made by private entities not acting on government or court authority (F8).

Notably, two companies go beyond the reporting of numbers and enlist the help of a non-profit project to publish the text of at least some of the content restriction requests that they receive. Founded in 2001, the Chilling Effects database hosted by Harvard’s Berkman Center for Internet and Society collects and analyzes legal complaints and requests for removal of online materials. In 2002, Google started submitting content removal requests that it receives from copyright holders. Since then, several other companies including Twitter have chosen to use the project as a neutral third-party host for takedown requests received around the world.

Identity policies

Indicator F11, which applied only to Internet companies, asks, “Does the company require users to verify their identity with government-issued identification, or with other forms of identification connected to their offline identity?” The answer “no” received full points and the answer “yes” received zero points. Google, Microsoft, Twitter, and Yahoo scored full points on this indicator. Facebook and Mail.ru both scored 67 percent. While Facebook’s Instagram photo sharing service and WhatsApp messaging application can be used without users having to share their real names, its namesake Facebook network has a “real name” policy that requires users to provide, upon request, forms of identity that can be connected to their government ID. Mail.ru’s VKontakte service maintains a similar requirement. Kakao received a 50 percent score due to vagueness in its policies about the circumstances and methods by which the company might seek to verify a user’s identity. Tencent received zero points due to strong “real name” policies for all services. For more information about how strict enforcement of “real name” policies can stifle freedom of expression, please see the Open Letter to Facebook published by a coalition of non-governmental organizations representing individuals who have experienced harm as a result of such policies.