Posted at 09:36h in Featured, News by Jessica Dheere

Original art by Paweł Kuczyński

As the United States struggles to respond to COVID-19 and the 2020 elections approach, misinformation on social media abounds, threatening both public health and our democracy. In Ranking Digital Rights’ (RDR) new report, “Getting to the Source of Infodemics: It’s the Business Model,” RDR Senior Policy Analyst Nathalie Maréchal, Director Rebecca MacKinnon, and I examine how we got here and what companies and the U.S. Congress can do to curb the power of targeted advertising to spread misinformation.

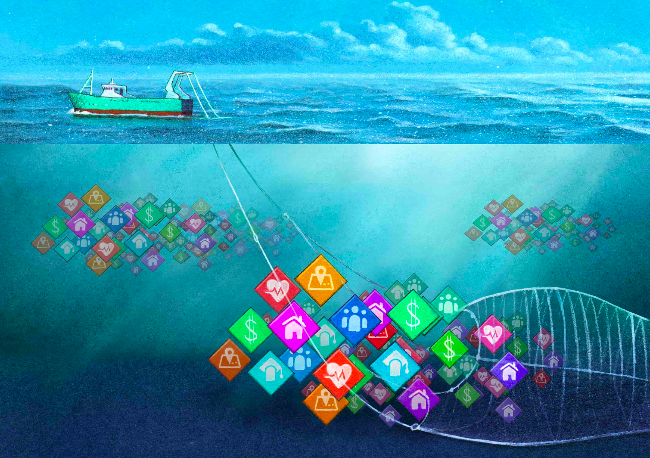

Targeted advertising relies on the processing of vast amounts of user data, which is then used to profile and target users without their clear knowledge or consent. While some other policy proposals focus on holding companies liable for their users’ online speech, our report calls for getting to the root of the problem: We describe concrete steps that Congress can take to hold social media companies accountable for their targeted advertising business model and the algorithmic systems that drive it.

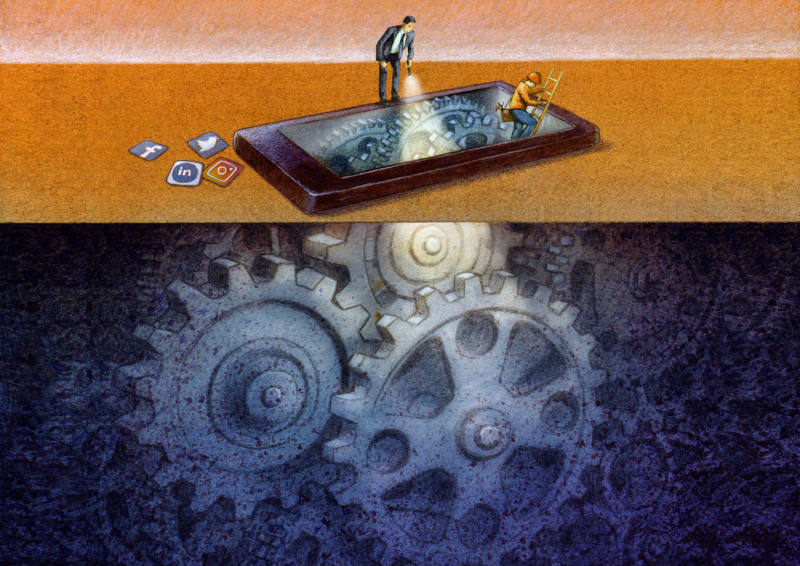

This is the second in our two-part series on targeted advertising and algorithmic systems. The first report, “It’s Not Just the Content, It’s the Business Model: Democracy’s Online Speech Challenge,” written by Maréchal and journalist and digital rights advocate Ellery Roberts Biddle, explained how algorithms determine the spread and placement of user-generated content and paid advertising, and why forcing companies to take down more content, more quickly, is ineffective and would be disastrous for free speech.

In this second part of our series, we argue that international human rights standards offer a framework, complementary to existing U.S. law, for holding social media platforms accountable for their social impact. This framework can help lawmakers determine how best to regulate these companies without curtailing users’ rights.

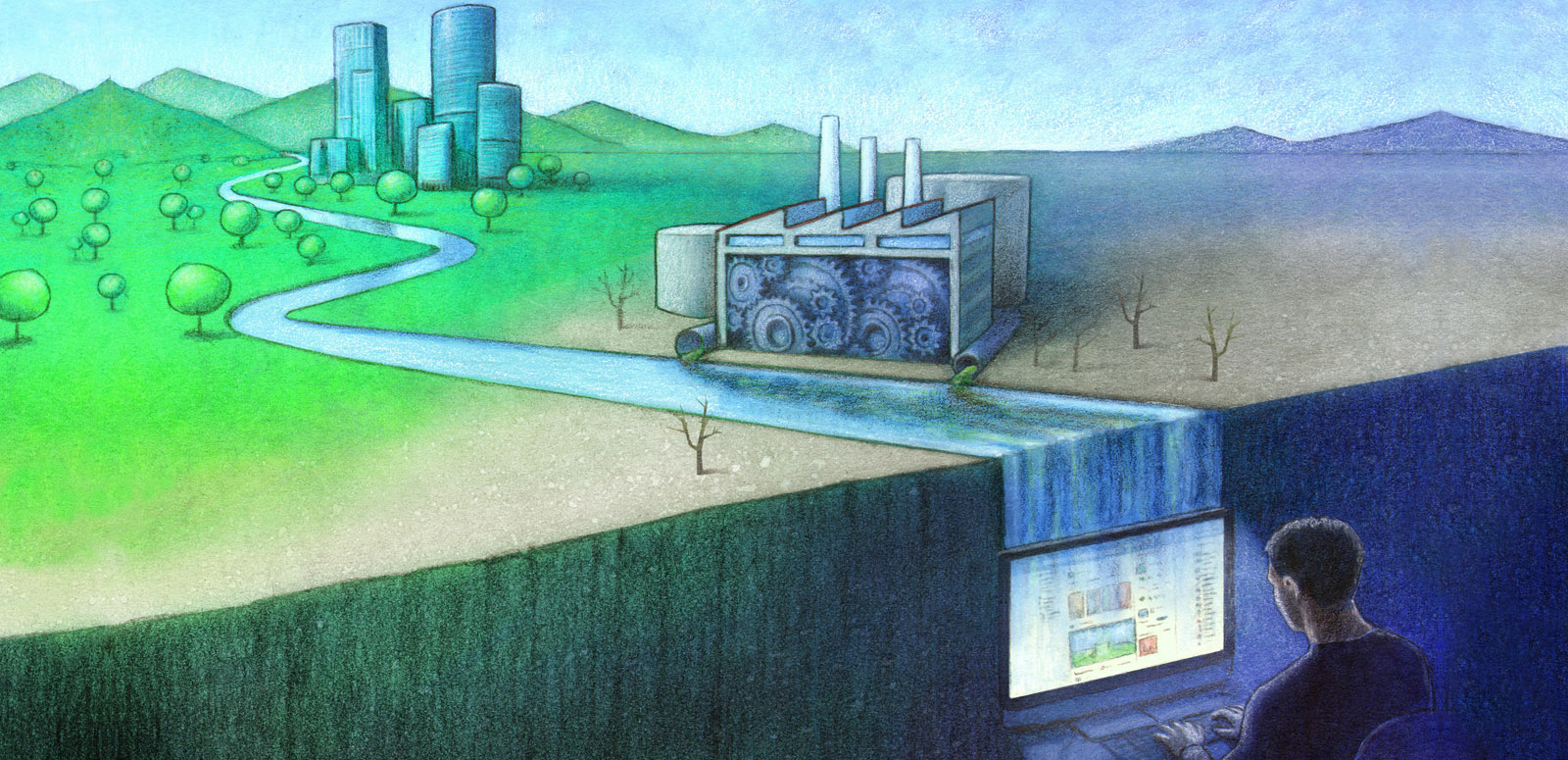

Drawing on five years of research for the Ranking Digital Rights (RDR) Corporate Accountability Index, we identify specific ways in which the three social media giants, Facebook, Google, and Twitter, have failed to respect users’ human rights as they deploy targeted advertising business models and algorithmic systems. We describe how the absence of data protection rules enables the unrestricted use of algorithms to make assumptions about users, and how those assumptions determine what content users see and what advertising is targeted to them. It is precisely this targeting that can result in discriminatory practices as well as the amplification of misinformation and harmful speech. We then present three areas where Congress needs to act to mitigate the harms of misinformation and other dangerous speech without compromising free expression and privacy: federal privacy law; transparency and accountability for online advertising, starting with political ads; and corporate governance reform.

First, we urge U.S. policymakers to enact a federal privacy law that protects people from the harmful impact of targeted advertising. Such a law should ensure effective enforcement by designating an existing federal agency, or creating a new one, to enforce privacy and transparency requirements applicable to digital platforms. The law must include strong data-minimization and purpose-limitation provisions. This means, among other things, that users should not be able to opt in to discriminatory advertising or to the collection of data that would enable it. Companies must also give users clear control over the collection and sharing of their information. Congress should restrict how companies are able to target users, including by prohibiting the use of third-party data to target specific individuals and by prohibiting discriminatory advertising that violates users’ civil rights.

Second, Congress should require that platforms maintain a public ad database to ensure compliance with all privacy and civil rights laws when engaging in ad targeting. Legislators must break the current deadlock and pass the Honest Ads Act, expand the public ad database to include all advertisements, and allow regulators and researchers to audit it.

Finally, Congress should require companies to disclose, and conduct due diligence on, the social and human rights impact of targeted advertising and algorithmic systems. This means mandating disclosure of targeted advertising revenue, along with environmental, social, and governance (ESG) information, including information about the social impact of these advertising and algorithmic systems.

Political deadlock in Washington, D.C., has closed the window for lawmakers to act in time for the November 2020 elections, but this issue must be a bipartisan priority in future legislative sessions. In the meantime, the companies should take immediate, voluntary steps to anticipate and mitigate the negative impact of targeted advertising and related algorithmic systems on the upcoming elections: We call on Facebook, Google, and Twitter to curtail political ad targeting between now and the November elections in order to dramatically reduce the flow and impact of election-related disinformation and misinformation on social media.

Please read the report, join the conversation on Twitter using #itsthebusinessmodel, and email us at itsthebusinessmodel@rankingdigitalrights.org with your feedback and to request a webinar for your organization.

We would like to thank Craig Newmark Philanthropies for making this report possible.