09 Jun UK Government calls for increased internet regulation, Brazil holds hearings on WhatsApp blocking, and Weibo users face restrictions on Tiananmen anniversary

Corporate Accountability News Highlights is a regular series by Ranking Digital Rights that highlights key news related to tech companies, freedom of expression, and privacy issues around the world.

UK Government calls for increased internet regulation following terror attack

UK Prime Minister Theresa May (Image via Number 10, licensed CC BY-NC-ND 2.0)

In response to the most recent terror attack in London, UK Prime Minister Theresa May is calling for tighter internet regulations to combat terrorism. May said she plans to pursue international agreements to regulate cyberspace and criticized internet companies for providing “safe spaces” that allow extremists to communicate.

Adding to May’s comments, UK Home Secretary Amber Rudd said the government wanted tech companies to do more to take down extremist content and to limit access to end-to-end encryption.

In response, some tech companies defended their efforts to identify and remove extremist content. “Using a combination of technology and human review, we work aggressively to remove terrorist content from our platform as soon as we become aware of it,” according to Facebook.

Human rights advocates warn that government attempts to regulate the internet could threaten freedom of expression and that efforts to address online extremist content must be consistent with international human rights norms. According to David Kaye, UN Special Rapporteur on freedom of expression, efforts to combat violent extremism can be the “perfect excuse” for both authoritarian and democratic governments to restrict freedom of expression and control access to information.

Brazil’s Supreme Court holds hearings on WhatsApp blocking

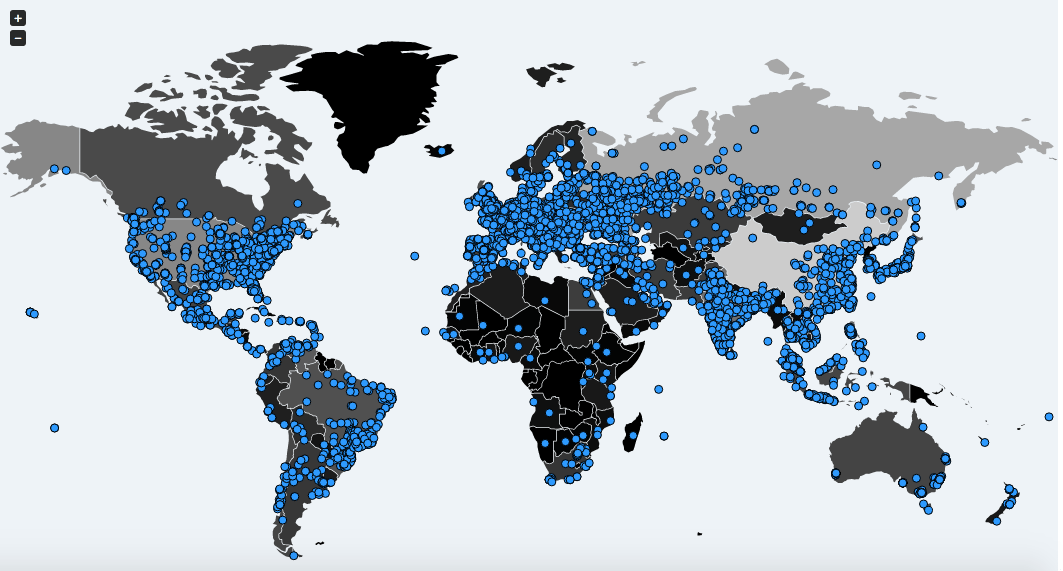

Brazil’s Supreme Court has held hearings to decide whether it is legal for courts to direct telecommunications companies to block apps like WhatsApp. Lower courts have ordered telecommunications companies to block WhatsApp (which is owned by Facebook) on numerous occasions, after the company refused to turn over user information requested as part of criminal investigations. Judges in these cases based their rulings on an interpretation of Brazil’s Marco Civil law that some civil society groups argue misapplies the law.

WhatsApp co-founder Brian Acton, who testified at one of the hearings, explained why the company is unable to turn over user information. “Encryption keys relating to conversations are restricted to the parties involved in those conversations,” Acton said. “No one has access to them, not even WhatsApp.” The court is expected to issue a ruling on the matter in the next few weeks.

Of the eight messaging and VoIP services evaluated in the 2017 Corporate Accountability Index, WhatsApp earned the highest score for its disclosure of encryption policies. The company disclosed that transmissions of user communications are encrypted by default using unique keys, and that end-to-end encryption is enabled for users by default.

Weibo users censored on Tiananmen anniversary

Users of Chinese social network service Weibo faced restrictions when attempting to post photos or videos on the platform during the anniversary of the 1989 Tiananmen Square pro-democracy protests. Users outside of China were unable to post photos or videos, and users within China also reported that they were unable to change their profile information or post photos and videos as comments during this time.

Although references to Tiananmen Square are regularly censored in China, both by the government and by technology companies, censorship is often heightened in early June each year, as documented by groups such as Freedom House. In a post on June 3, Weibo said that some of the platform’s functions would not be available until June 5 due to a “systems upgrade.” Comments on the post were disabled, and according to a Mashable reporter, “when we attempted to post a comment saying: ‘I want to comment,’ a notice popped up saying that the comment was in violation of Weibo’s community standards.”

As research in the 2017 Corporate Accountability Index showed, Chinese companies are legally liable for publishing or transmitting prohibited content, and services that do not make a concerted effort to police such content can be blocked in China.