30 May RDR Turns 10! — And Looks to the Future

It’s our 10-year anniversary, which means that RDR has been keeping tech companies accountable for over a decade. In honor of this occasion, the RDR team held its first-ever in-person retreat to set our new strategic priorities! It was a fantastic opportunity to get the team together, and also the first time some of our remote team members met in person. Our team arrived from Washington, D.C., Barcelona, Paris, and Montreal.

While we marked this milestone for RDR, we in the digital rights community at large also find ourselves at an inflexion point: Almost every week, tech companies are announcing the integration of artificial intelligence into their services. Our research seems more crucial today than ever. RDR has always kept abreast of trends in the field, as when we integrated algorithm transparency and targeted advertising standards into our methodology in 2020. Yet the speed of evolution in AI today is unprecedented. It calls for us to evolve like never before.

With this in mind, our objectives for the retreat were:

- Examine both our accomplishments and our challenges over the past decade. Based on this assessment, determine how we can have the most impact moving forward in a rapidly changing tech landscape.

- Determine ways to refine our methods and standards (to allow for more flexibility and to allow us to build new products, including mini indexes!) and consider how we can best strengthen our relationships with civil society partners and the investor community.

- Determine upcoming dates for the release of our next Corporate Accountability Indexes.

The Retreat: RDR’s Strategic Priorities

On day 1, we met in Amsterdam and headed toward our venue.

Over the next 3 days, we determined 5 strategic areas that RDR will prioritize moving forward:

- Setting the standard: Our standards should remain relevant and enhance our collaborations with all stakeholders including benchmarks, regulators, policymakers, investors, and ESG rating agencies. This means making sure our standards are flexible and can be deployed to evaluate both new and emerging technologies.

- Fostering actionable methodology: We identified the need to make our methodology more flexible and efficient, and therefore adaptable both to new technology and to a wider array of companies. We also explored ways of providing more targeted information to inform specific policy issues, further extending the work we have begun with our Scorecard “Lenses.”

- Catering to investor needs: RDR’s work with investors has grown in recent years. Over the past two years, our data has been employed to great success in a growing number of investor proposals. RDR has directly engaged in many of these. Some of the most successful include:

a) A proposal at Alphabet (Google) that informed the company’s decision to terminate its FLoC targeted advertising project.

b) A proposal at Meta calling for a human rights impact assessment of its targeted advertising business model, which became the most successful topical shareholder proposal in Meta’s history.

This year we are once again actively partnering with investors to bring human rights issues to the table at companies like Amazon, Google, and Meta through shareholder proposals at their upcoming annual meetings. In particular, we conceptualized and developed the first-ever proposal on transparency reporting filed at Amazon (and likely at any e-commerce company). The success of these proposals, and of our investor work more broadly, has made clear the value of an investor-first approach to our work. For this reason, we will begin mapping out investor needs and set out to address them as directly as possible in our upcoming indexes.

- Growing the movement: The number of civil society partners with whom we engage has also grown, with RDR currently involved in supporting 12 adaptations covering 34 countries and 122 companies. This means a slew of new data has become available, including on subsidiaries of the companies we rank. This data can also be useful for the investor community, among others. We will work to both expand and refine the release of such reports.

- Communicating smarter: RDR will work to ensure that the findings that emerge from our data translate easily into media-friendly stories. We will ensure the media is aware that RDR staff can speak on a number of topics and trends, not just our Scorecards. These include investors, Big Tech and Telcos, targeted advertising and algorithms, and other important questions related to emerging technologies like AI.

Looking Forward: What’s Next for RDR?

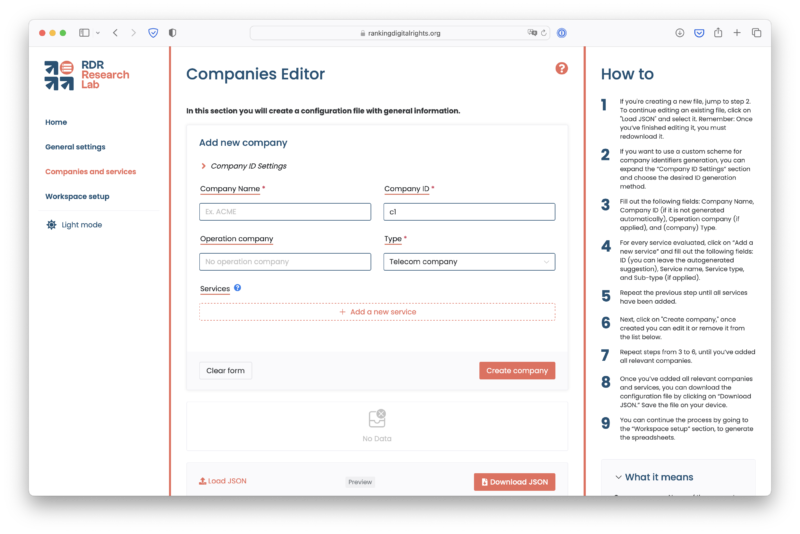

RDR will spend the remainder of 2023 publicly reflecting on a decade of lessons learned in tech-sector accountability for human rights. To keep up with the rapid advances in new technology, we are also taking advantage of our non-Index year to develop new standards for artificial intelligence. These will be shared publicly at the end of this summer. We’re also taking stock of how our methodology and standards can be refined to best serve the needs of our stakeholders, including investors, as well as companies and civil society, when our next Corporate Accountability Indexes are released.

With this in mind, RDR is happy to announce that our next Big Tech Scorecard will be out in the fall of 2024, and our Telco Giants Scorecard will be released in 2025. Also, look out for new mini indexes on emerging technologies, coming soon!

Stay tuned!